Python class, dict, named tuple performance and memory usage

While most would say to use C or C++ or Rust or C# or Java… I decided I wanted to look at the edges of Python performance and memory usage. Specifically, I set out to figure out the best approach for efficiently approximating C structs or classes that are more about properties than functionality.

I wanted to find which Python 3 “class” or dictionary approach was the most memory efficient while still being fast for creating, updating and reading a single object. I chose to look at the following:

- Python dict (the old standby)

- Python class

- Python class with `__slots__` (this idea was added after a suggestion from an engineer)

- dataclass

- recordclass (still beta)

- NamedTuple (the typed extension of `collections.namedtuple`)
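For readers who have not used all of these, here is a minimal sketch of the same three-field record in each standard-library flavor. The `make_dict` helper, the `Point*` names and the fields are hypothetical and not taken from the test code; recordclass is left out because it is a third-party package.

```python
from dataclasses import dataclass
from typing import NamedTuple


def make_dict(name, x, y):
    """Plain dict: no fixed schema, each record is its own hash table."""
    return {"name": name, "x": x, "y": y}


class PointClass:
    """Regular class: attributes live in a per-instance __dict__."""

    def __init__(self, name, x, y):
        self.name = name
        self.x = x
        self.y = y


class PointSlots:
    """Class with __slots__: fixed attribute set, no per-instance __dict__."""

    __slots__ = ("name", "x", "y")

    def __init__(self, name, x, y):
        self.name = name
        self.x = x
        self.y = y


@dataclass
class PointData:
    """dataclass: __init__, __repr__ and __eq__ are generated from the fields."""

    name: str
    x: int
    y: int


class PointTuple(NamedTuple):
    """NamedTuple: an immutable tuple with named, typed fields."""

    name: str
    x: int
    y: int


records = [
    make_dict("a", 1, 2),
    PointClass("a", 1, 2),
    PointSlots("a", 1, 2),
    PointData("a", 1, 2),
    PointTuple("a", 1, 2),
]
```

The dict is read with `record["x"]`; every other variant is read with `record.x`.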

In the end I borrowed from these gists to create some Python code to test all of the above. Then I also found a “total size” function to estimate the size of the data structures in memory from this gist. Here’s the code to measure and test Python’s performance and memory usage.
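A “total size” helper of this kind usually walks an object recursively and sums `sys.getsizeof` for everything it reaches. The sketch below is my own minimal version of that idea, not the gist's actual code; the name `total_size` and the set of container types it handles are assumptions.

```python
import sys


def total_size(obj, seen=None):
    """Approximate the deep size of obj in bytes, counting each object once."""
    if seen is None:
        seen = set()
    if id(obj) in seen:
        return 0  # already counted (shared or cyclic reference)
    seen.add(id(obj))

    size = sys.getsizeof(obj)

    if isinstance(obj, dict):
        size += sum(total_size(k, seen) + total_size(v, seen) for k, v in obj.items())
    elif isinstance(obj, (list, tuple, set, frozenset)):
        size += sum(total_size(item, seen) for item in obj)

    # Instances of regular classes keep their attributes in __dict__.
    if hasattr(obj, "__dict__"):
        size += total_size(vars(obj), seen)

    # Slotted instances have no __dict__; follow the names in __slots__ instead.
    # Simplification: only the most-derived class's __slots__ are inspected.
    slots = getattr(type(obj), "__slots__", ())
    if isinstance(slots, str):
        slots = (slots,)
    for name in slots:
        if hasattr(obj, name):
            size += total_size(getattr(obj, name), seen)

    return size
```

Dividing `total_size(store)` by the number of entries gives a rough bytes-per-entry figure. The result is an estimate: `sys.getsizeof` only reports what each object declares about itself, and interned or cached objects (small integers, short strings) are shared across the whole process.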

The test involved the following:

- Create dictionary of 100,000 objects for each of the various “classes”.

- Read each object's entry value.

- Read a sub-property of each entry.

- Read and make a small calculation of two sub-properties.

- Make a top-level change/overwrite to each object.

- Read a property via a class method rather than directly.

- Change/overwrite a property via a class method rather than directly.

- Measure the memory footprint of the 100,000 object dictionary.
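The steps above can be sketched as a small timing harness. This is a simplified illustration for one of the approaches (a slotted class), not the original test code: `Record`, `ops_per_second` and the field names are hypothetical, and each step is timed once with `time.perf_counter`.

```python
import time

N = 100_000


class Record:
    """Hypothetical slotted record with one value and one nested sub-object."""

    __slots__ = ("value", "sub")

    def __init__(self, value, sub):
        self.value = value
        self.sub = sub

    def get_value(self):
        return self.value

    def set_value(self, value):
        self.value = value


def ops_per_second(func, count):
    """Run func once and convert its wall-clock time into operations per second."""
    start = time.perf_counter()
    func()
    elapsed = time.perf_counter() - start
    return count / elapsed if elapsed > 0 else float("inf")


store = {}


def create():
    for i in range(N):
        store[i] = Record(i, {"a": i, "b": i + 1})


def read():
    for i in range(N):
        _ = store[i].value


def sub_read():
    for i in range(N):
        _ = store[i].sub["a"]


def read_calc():
    for i in range(N):
        _ = store[i].sub["a"] + store[i].sub["b"]


def update():
    for i in range(N):
        store[i].value = i * 2


def method_read():
    for i in range(N):
        _ = store[i].get_value()


def method_update():
    for i in range(N):
        store[i].set_value(i * 3)


steps = [create, read, sub_read, read_calc, update, method_read, method_update]
results = {step.__name__: ops_per_second(step, N) for step in steps}

for name, rate in results.items():
    print(f"{name:>14} / sec: {rate:>12,.0f}")
```

A single pass like this is noisy; repeating each step several times and keeping the best run (or using the `timeit` module) gives steadier numbers.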

Raw performance and memory test results

| Test | Python dict | Python class | Python class + slots | dataclass | recordclass | NamedTuple |
|---|---|---|---|---|---|---|
| creates / sec | 369,377 | 264,405 | 354,373 | 274,175 | 418,359 | 307,269 |
| reads / sec | 17,076,394 | 24,402,513 | 25,380,031 | 21,810,119 | 28,528,798 | 24,930,480 |
| sub-reads / sec | 8,383,577 | 7,880,326 | 10,508,616 | 7,355,073 | 8,597,880 | 6,196,159 |
| read + calc / sec | 1,434,553 | 1,386,120 | 1,478,981 | 1,183,287 | 1,298,735 | 1,129,088 |
| top-level change / sec | 6,586,636 | 5,969,859 | 8,098,519 | 6,318,340 | 7,849,210 | 849,501 |
| class read / sec | 1,437,646 | 1,165,268 | 1,265,406 | 1,143,719 | 1,101,867 | 1,000,530 |
| class update / sec | 10,171,955 | 4,178,176 | 5,626,841 | 4,727,680 | 4,761,006 | 787,321 |
| bytes per entry (memory) | 658 | 170 | 178 | 170 | 154 | 346 |

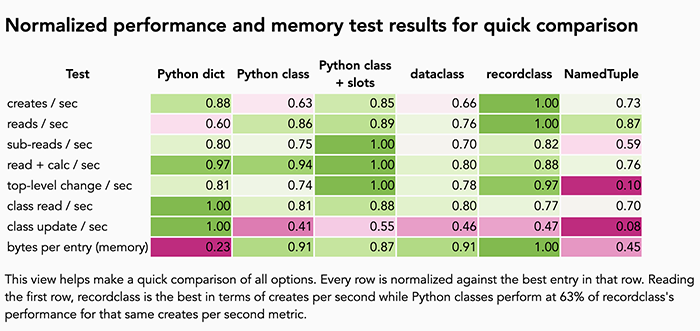

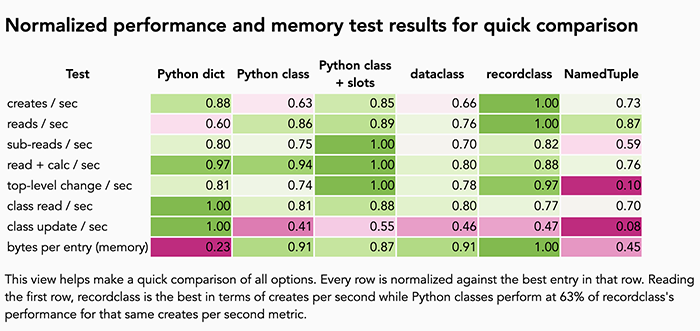

Overall, performance was pretty high across the board. However, creating new objects was the slowest operation for every approach, most likely because each creation has to allocate memory. It was encouraging to see that Python can generally manage millions of reads and updates per second in a single process and a single thread. It’s also pretty apparent that our default dictionary approach does indeed come at a cost in memory.

Conclusion: What is the best performing approach for managing “objects” in Python?

Let’s just go with the ranked list approach from best to worst:

- Python class + slots – This approach really balanced everything. High performance, low memory usage.

- recordclass – This could have taken the #1 spot, but its sub-reads and class-method reads were a bit slower and it’s still considered beta.

- (Tie) Python class & dataclass – Both of these approaches did pretty well, though creates are slowest in the bunch.

- Python dict – If you don’t care about memory or “class-style” properties, then the Python dict is very good, but with nearly 4x the memory overhead of a plain class (658 vs. 170 bytes per entry), it was moved down the list.

- NamedTuple – This approach doesn’t really buy you anything compared to everything else on the list. More memory and slower perf due to working around immutability means it’s the best of no worlds.
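To illustrate why updates are the weak spot for NamedTuple: a tuple cannot be changed in place, so an “update” has to build a new object and store it again. The sketch below shows that pattern with `_replace`; it is an assumption about what “working around immutability” looks like in practice, and the `Point` type is hypothetical.

```python
from typing import NamedTuple


class Point(NamedTuple):
    x: int
    y: int


p = Point(1, 2)

try:
    p.x = 10  # tuples are immutable, so attribute assignment fails
    assigned = True
except AttributeError:
    assigned = False

# The workaround: _replace builds and returns a brand-new tuple.
updated = p._replace(x=10)

# In a dictionary of records, every "update" therefore becomes
# allocate-a-new-tuple plus reassign-the-entry.
store = {"p": p}
store["p"] = store["p"]._replace(x=10)
```

A slotted class or dataclass performs the same change with a single in-place attribute assignment, which fits the much higher change rates they show in the table.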